We’re once again at the end of our internship season, and it’s my task to provide a few highlights of what the interns accomplished while they were here.

And once again, the program was bigger than ever. We had 122 dev interns globally, coming from 42 different schools and 13 countries. As the program grows, it gets harder and harder to summarize it with any degree of fidelity by picking just three projects.

But, choose we must! So here are my somewhat arbitrarily chosen example projects:

-

Aaron Lamoreaux extended magic-trace to support a sampling mode, along with several other improvements.

-

Matthew Sirman worked on integrating Datafetcher, a library for writing testable and efficient data-loading-and-transformation tasks, with Incremental, a library for building on-line algorithms.

-

David Vulakh extended our Quickcheck property-based testing library with a convenient syntax for specifying contracts to simplify the testing of complex APIs.

Now, let’s dive in to the details!

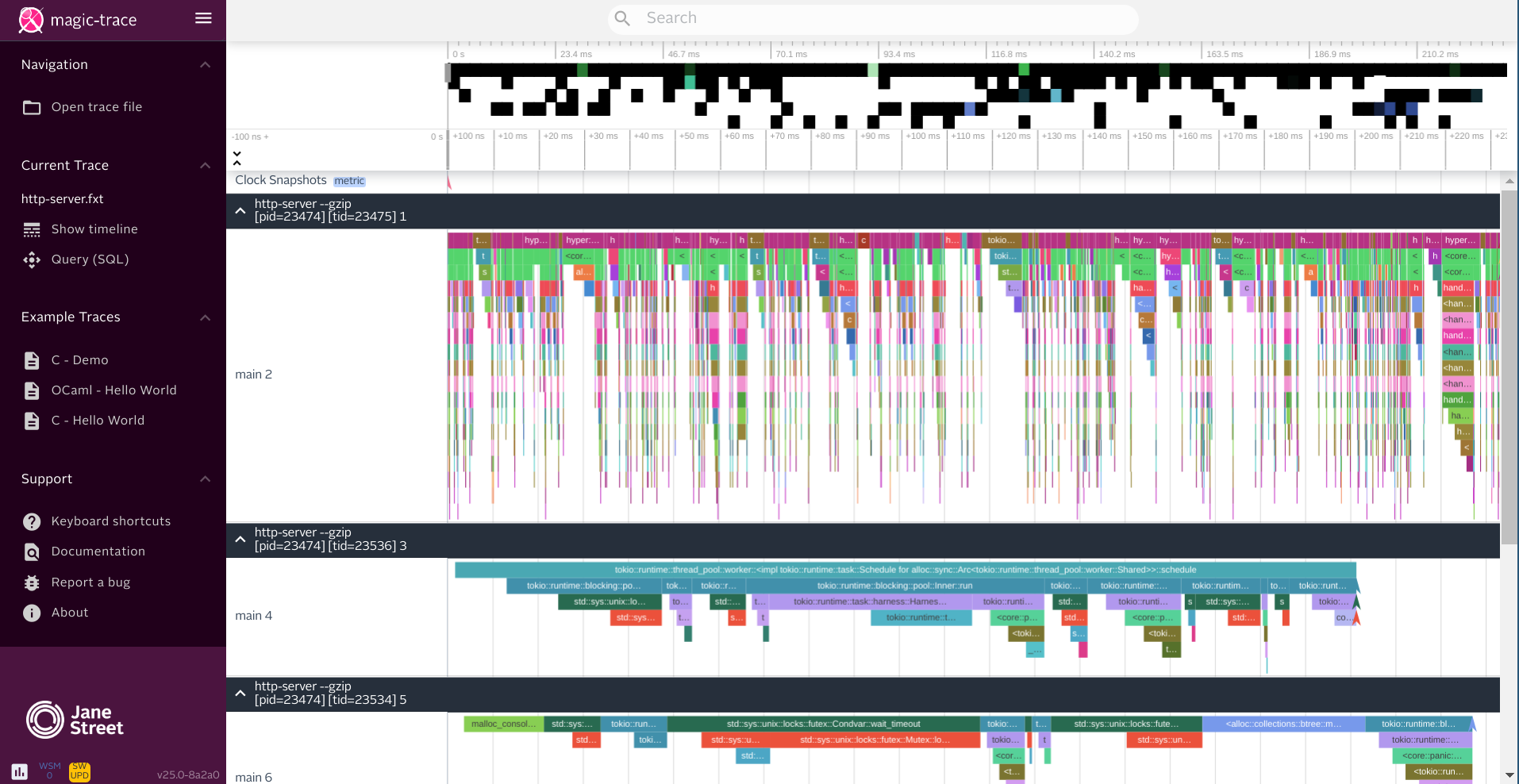

Sampling-mode for magic-trace

magic-trace is an open-source tool we built that takes advantage of Intel Processor Trace (Intel PT) to provide hyper-detailed traces of everything a program did for a small window of time, around 10ms.

The initial version of magic-trace was implemented as an intern project (briefly mentioned here), and we’ve since open-sourced it and made it available on Github.

Part of what makes magic-trace great is the enormous detail afforded by Intel PT’s ability to cheaply write down every branch taken by a process. But another one of magic-trace’s super-powers is its extreme ease-of-use. Lots of performance analysis tools require you to figure out the right arcane invocation, and then stare at some confusing text output to divine what’s going on.

magic-trace tries really hard to get rid of all that noise. The command-line tool does sensible things by default, and instead of staring at an arcane blog of text, it’s integrated with a modified version of Perfetto, a pretty and intuitive trace-viewer from Google.

Aaron Lamoreaux’s primary project was to extend magic-trace to cover more territory, while still maintaining magic-trace’s great user-experience. In particular, Aaron added a sampling mode to magic-trace, so, rather than being able to see everything that happened in the 10ms leading up to a given event, you could instead get sampled data for the last 20 seconds before an interesting event, or even 20 minutes. Another win is that sampling can be done on AMD boxes, while Intel PT is an Intel-only feature.

A lot of the hard work here about getting the user experience right, and in particular figuring out how to pick good default behaviors depending on what users are doing and what hardware they’re running on. E.g., picking a reasonable sampling rate, or deciding whether to use LBR or DWARF when interpreting stack traces.

The end result hasn’t yet been rolled into production, but some folk from our Marketdata team have already put it to good use, figuring out that one of our packet-processing applications was wasting a lot of time in the GC due to allocating something on each packet. magic-trace made it trivial to see the problem, and to figure out where the stray allocation was coming from.

While sampling was his main project, Aaron got some other magic-trace improvements out too, like:

-

multi-process tracing, so you could see combined traces from two applications running on the same box, and see what happens as data flows from one to the other.

-

CPU-frequency transition reporting, so you can see when a use of AVX2 instructions causes you to stall for 14µs!

-

Optimizing magic-trace’s trace decoding step by 10%-50% (depending on the trace) by writing a dlfilter back-end for perf.

Marrying Datafetcher and Incremental

Datafetcher is a library that we talked about in last year’s “wrought” post, which is designed to help you write jobs that fetch and transform data. Critically, it understands the structure of the job its running, which lets it both optimize the execution, e.g., by batching requests, and helps you build tests by automatically recording external data so that you can replay it in your tests.

A traditional datafetcher program is a batch job: E.g., grab a bunch of data from different services, munge it all together, and produce configs for your desk’s trading infrastructure.

But sometimes, we want to build systems that look at both static data sources and live marketdata. Today, if we build such a computation in Datafetcher, we’d have to run it over and over in a loop, which is really slow!

Matthew Sirman’s task was to figure out how we could make Datafetcher jobs that could respond efficiently to live data, without having to be rerun from scratch. In particular, he did that by combining Datafetcher with a library called Incremental.

We’ve talked about Incremental a lot over the years, but the basic point of Incremental is to help you build programs that can be refreshed cheaply when their inputs change.

Incremental and Datafetcher are in some ways pretty similar: they both organize your computation as a graph, tracking dependencies between different stages of the work; and they use similar APIs for doing so, both taking advantage of our syntax extension for making such computations easier to read and write.

But they’re also really different!

- Datafetcher provides batched and testable all-at-once execution of asynchronous programs

- Incremental provides incremental execution of synchronous programs.

Why not both? Can we build a system that gives us batched, testable, and incremental execution of an asynchronous program, by essentially compiling a Datafetcher program into an Incremental computation?

We can, and doing that was the core of Matthew’s project.

There were a lot of things to do along the way. One step was to figure

out how to write an incremental computation that could deal with

asynchrony. This required a way of representing an in-process

Incremental computations. The solution here was to add a new type

(called Response_or_waiting) that represented the current state of

an asynchronous computation.

Figuring out when and where to memoize was another challenge. For intermediate spots in the computation, the decision was to give users the ability to explicitly decide where memoization should occur. For fetch-nodes, memoization was important for correctness and so was not optional, so that computations would behave consistently, even in cases where re-issuing the same fetch request could lead to different results.

There were other issues too, like figuring out how to conveniently test incremental datafetcher jobs, and how to automatically convert ordinary datafetcher jobs (which get the data just once) into incremental ones, which will continue to update via polling.

In addition to working on extensions to the Datafetcher library, Matthew got to utilize those extensions to improve the desk-application that motivated this work in the first place, getting to see the end-to-end application of his work. It’s not quite in production yet, but the results look good so far.

Contracts for Quickcheck

Testing is something we spend a lot of time on here, and over the years, we’ve built a lot of tools to make testing easier and more pleasant.

One of those tools is our Quickcheck library. Quickcheck helps you with property-based testing, which is a style of testing where you marry together a collection of properties you want to test with a mechanism for generating random examples to test with.

Quickcheck aims to make this kind of testing easy. It does this primarily by providing libraries for building probability distributions, combined with a syntax extension that obviates most of the boilerplate of using those libraries. Here’s an example.

type shape =

| Circle of { radius: float }

| Rect of { height: float; width: float }

| Poly of (float * float) list

[@@deriving quickcheck];;

Given the above, ppx_quickcheck will generate a probability

distribution automatically. That distribution makes a bunch of

choices (like equiprobably returning a Circle, Rect, or Poly),

but you can add more annotations to tweak the distribution if you need

to.

Even with all this, there’s still a decent amount of work required to set up each test for each property you want to validate. That’s where David Vulakh’s project came in. David added a new syntax for reducing the boilerplate further. In particular, his syntax extension lets you specify explicit contracts associated with each call in an interface, and the rest of the test generation can be driven from that.

This is maybe easiest to explain with an example. Here’s a subset of

the String API in Base, to

which this contract syntax has been applied:

val to_list : t -> char list

[@@contract fun t -> equal t (t |> to_list |> of_char_list)]

val length : t -> int

[@@contract fun t -> length t = List.length (to_list t)]

Note that contracts can use multiple functions from the same API to

express their property. In the case of to_list, the contract checks

that if you take a string, convert it to a list of characters, and

then back into a string, the result is equal to the string you started

with.

The way in which these contracts are exercised is pretty simple: regular Quickcheck generators are used to create all of the inputs for a given function, and then the function is called on them, and the property is checked, signaling an error if the property fails.

This use-case is pretty simple, but there are some complicated corners that needed to be figured out, like providing more annotations to allow customizing the probability distributions used for particular arguments.

After finishing up the contract work, David then worked on another extension to Quickcheck, which is support for bisimulation-style tests. The idea of bisimulation is to have two different implementations of the same API, and to test them against each other. What’s tricky here is that it’s not enough to generate random inputs and call each function; you actually need to call sequences of operations to build up the values that you’re operating on. This is especially useful for tricky, performance sensitive data-structures, where it’s easy to write a correct-but-slow version, and really quite hard to write a correct and well-optimized one.

There’s a bit more work to do to get the bisimulation mode to the point where it really covers everything we need, but it’s already at a stage where it’s useful for real examples.

So, what are you waiting for?

Summarizing the whole summer in just a handful of projects is an impossible task, but I hope this gives you a flavor of the kinds of things that our interns are able to accomplish. (And I should mention: this work only reflects part of their work! Each intern actually works in two different areas over the course of the summer.)

If this sounds like the kind of work you’d like to do, then you should apply. The internship is a great chance to learn, both about software engineering, and about the world of trading. And if you’re curious about our interview process, check this out.