Last year we held a machine learning seminar in our London office, which was an opportunity to reproduce some classical deep learning results with a nice twist: we used OCaml as a programming language rather than Python. This allowed us to train models defined in a functional way in OCaml on a GPU using TensorFlow.

Specifically we looked at a computer vision application, Neural Style Transfer, and at character-level language modeling.

OCaml Bindings for TensorFlow

TensorFlow is a numerical library based on data flow graphs. It was originally developed by Google and was open-sourced at the end of 2015. Using it, one can easily specify a computation graph and execute it in an optimized way on multiple CPUs or GPUs. A typical use case is neural networks, which are easily represented as computation graphs. TensorFlow can also compute symbolic gradients for these graphs, which makes it easy to minimize a loss function.
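
To make the idea of a computation graph with symbolic gradients concrete, here is a minimal sketch in plain OCaml. It does not use TensorFlow or the bindings at all: the `node` type and the `eval`, `grad` and `minimize` functions are hypothetical names introduced for this illustration only, and the graph handles scalars rather than tensors.

```ocaml
(* A toy computation graph: each node is a constant, a variable,
   or an operation on other nodes. *)
type node =
  | Const of float
  | Var of string
  | Add of node * node
  | Mul of node * node

(* Evaluate a graph given a binding of variable names to values. *)
let rec eval env = function
  | Const c -> c
  | Var v -> List.assoc v env
  | Add (a, b) -> eval env a +. eval env b
  | Mul (a, b) -> eval env a *. eval env b

(* Symbolic gradient: builds a new graph computing d(node)/d(x). *)
let rec grad x = function
  | Const _ -> Const 0.
  | Var v -> Const (if v = x then 1. else 0.)
  | Add (a, b) -> Add (grad x a, grad x b)
  | Mul (a, b) -> Add (Mul (grad x a, b), Mul (a, grad x b))

(* loss(w) = (3 * w - 6)^2, which is minimal at w = 2. *)
let loss =
  let err = Add (Mul (Var "w", Const 3.), Const (-6.)) in
  Mul (err, err)

(* Plain gradient descent driven by the symbolic gradient graph. *)
let minimize ~steps ~learning_rate =
  let dloss = grad "w" loss in
  let rec loop w n =
    if n = 0 then w
    else loop (w -. (learning_rate *. eval [ "w", w ] dloss)) (n - 1)
  in
  loop 0. steps
```

Calling `minimize ~steps:20 ~learning_rate:0.05` moves `w` from 0 towards 2, the minimum of the loss. The gradient is itself a graph, so it can be evaluated with the same `eval` function as the loss.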

Some TensorFlow OCaml bindings make it possible to use TensorFlow from OCaml programs. These bindings also provide an API that makes it easy to describe complex neural network architectures in a functional way.

To illustrate this, here is a simple implementation of VGG-19, a classical Convolutional Neural Network (CNN) architecture from 2014.

    let vgg19 () =
      let block iter ~block_idx ~out_channels x =
        List.init iter ~f:Fn.id
        |> List.fold ~init:x ~f:(fun acc idx ->
          Fnn.conv2d () acc
            ~name:(sprintf "conv%d_%d" block_idx (idx+1))
            ~w_init:(`normal 0.1) ~filter:(3, 3) ~strides:(1, 1) ~padding:`same ~out_channels
          |> Fnn.relu)
        |> Fnn.max_pool ~filter:(2, 2) ~strides:(2, 2) ~padding:`same
      in
      let input, input_id = Fnn.input ~shape:(D3 (img_size, img_size, 3)) in
      let model =
        Fnn.reshape input ~shape:(D3 (img_size, img_size, 3))
        |> block 2 ~block_idx:1 ~out_channels:64
        |> block 2 ~block_idx:2 ~out_channels:128
        |> block 4 ~block_idx:3 ~out_channels:256
        |> block 4 ~block_idx:4 ~out_channels:512
        |> block 4 ~block_idx:5 ~out_channels:512
        |> Fnn.flatten
        |> Fnn.dense ~name:"fc6" ~w_init:(`normal 0.1) 4096
        |> Fnn.relu
        |> Fnn.dense ~name:"fc7" ~w_init:(`normal 0.1) 4096
        |> Fnn.relu
        |> Fnn.dense ~name:"fc8" ~w_init:(`normal 0.1) 1000
        |> Fnn.softmax
        |> Fnn.Model.create Float
      in
      input_id, model
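
To see how the tensor shape evolves through this network, the following plain OCaml sketch tracks the spatial size and channel count after each block. It assumes an input size of 224, which is the usual choice for VGG models but is not stated in the code above, and it relies on the convention that a layer with `same` padding and stride s maps a size n to ceil(n / s). The helper names are hypothetical and not part of the bindings.

```ocaml
(* Output size of a strided layer with same padding: ceil (size / stride). *)
let same_padding_out ~stride size = (size + stride - 1) / stride

(* (number of 3x3 convolutions, output channels) for each block,
   matching the block calls in the model definition. *)
let blocks = [ 2, 64; 2, 128; 4, 256; 4, 512; 4, 512 ]

(* Returns (height, width, channels) after every block. The stride-1
   convolutions keep the spatial size; the 2x2 max-pool halves it. *)
let shapes_after_blocks ~img_size =
  let _, shapes =
    List.fold_left
      (fun (size, acc) (_convs, out_channels) ->
        let size = same_padding_out ~stride:2 size in
        size, (size, size, out_channels) :: acc)
      (img_size, [])
      blocks
  in
  List.rev shapes

(* Total number of convolutional layers across all blocks. *)
let conv_layers = List.fold_left (fun n (convs, _) -> n + convs) 0 blocks

let () =
  List.iteri
    (fun i (h, w, c) -> Printf.printf "block %d: %dx%dx%d\n" (i + 1) h w c)
    (shapes_after_blocks ~img_size:224)
```

Under this assumption the last block outputs a 7x7x512 tensor, so the flatten layer feeds 25088 values into the first dense layer. The 16 convolutional layers plus the 3 dense layers account for the 19 in the name VGG-19.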

As an example of a Recurrent Neural Network (RNN), a Long Short-Term Memory (LSTM) unit is also pretty easy to define.

    let lstm ~size_c ~size_x =
      let create_vars () =
        Var.normal32 [ size_c+size_x; size_c ] ~stddev:0.1,
        Var.float32 [ size_c ] 0.
      in
      let wf, bf = create_vars () in
      let wi, bi = create_vars () in
      let wC, bC = create_vars () in
      let wo, bo = create_vars () in
      Staged.stage (fun ~h ~x ~c ->
        let h_and_x = concat one32 [ h; x ] in
        let c =
          sigmoid (h_and_x *^ wf + bf) * c
          + sigmoid (h_and_x *^ wi + bi) * tanh (h_and_x *^ wC + bC)
        in
        let h = sigmoid (h_and_x *^ wo + bo) * tanh c in
        `h h, `c c)
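
For readers less familiar with LSTMs, here is a scalar version of one LSTM step in plain OCaml, following the standard formulation: three sigmoid gates (forget, input, output) and a tanh candidate value. The record types and the `affine` and `step` functions are hypothetical names for this sketch, and every tensor is replaced by a single float, so this only illustrates the arithmetic and is not how the bindings work.

```ocaml
let sigmoid z = 1. /. (1. +. exp (-.z))

(* Weights of one gate for a scalar LSTM: the gate computes
   w_h * h + w_x * x + b, the scalar analogue of h_and_x *^ w + b. *)
type gate = { w_h : float; w_x : float; b : float }

type lstm = { forget : gate; input : gate; candidate : gate; output : gate }

let affine g ~h ~x = (g.w_h *. h) +. (g.w_x *. x) +. g.b

(* One time step: returns the new hidden state and the new cell state. *)
let step t ~h ~x ~c =
  let f = sigmoid (affine t.forget ~h ~x) in
  let i = sigmoid (affine t.input ~h ~x) in
  let c_tilde = tanh (affine t.candidate ~h ~x) in
  let o = sigmoid (affine t.output ~h ~x) in
  (* Keep a fraction f of the old cell state, add a fraction i of the
     candidate value. *)
  let c = (f *. c) +. (i *. c_tilde) in
  let h = o *. tanh c in
  h, c
```

With all weights and biases set to zero, every gate evaluates to 0.5 and the candidate to 0, so one step simply halves the cell state.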

Adding some Type Safety to TensorFlow

On the OCaml side, a node of the TensorFlow computation graph has type 'a Node.t, where 'a represents the kind of value that the node contains, encoded as a polymorphic variant. For example, a node could have type [`float] Node.t if the associated value is a tensor of single-precision floating-point values.

The OCaml code wrapping TensorFlow operations can then specify the kind of nodes that are used and returned depending on the operation. For example the square root function has the following signature:

    val sqrt
      :  ([< `float | `double ] as 't) t
      -> ([< `float | `double ] as 't) t

This signature specifies that sqrt takes as input a single node containing single- or double-precision floats. The resulting node contains values of the same kind. Another example is the greaterEqual comparison operator, whose signature is:

    val greaterEqual
      :  ([< `float | `double | `int32 | `int64 ] as 't) t
      -> ([< `float | `double | `int32 | `int64 ] as 't) t
      -> [ `bool ] t

This specifies that greaterEqual takes two nodes as input. These nodes have to contain the same kind of values, and this kind can be single- or double-precision floats or 32-bit or 64-bit integers. The operation then returns a tensor of boolean values.

Thanks to these signatures, sqrt (greaterEqual n1 n2) is rejected with a compile-time error, as the input of sqrt would contain boolean values.
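
The mechanism can be tried out without TensorFlow. The sketch below defines a toy `Node` module whose phantom type parameter plays the same role as in the signatures above. It is an illustration only: the string-based representation and the names `const_float`, `const_int32`, `greater_equal` and `to_string` are assumptions made for this example, not the API of the bindings.

```ocaml
module Node : sig
  (* The type parameter is a phantom: it only exists at the type level. *)
  type 'a t

  val const_float : float -> [ `float ] t
  val const_int32 : int32 -> [ `int32 ] t
  val sqrt : ([< `float | `double ] as 't) t -> 't t

  val greater_equal
    :  ([< `float | `double | `int32 | `int64 ] as 't) t
    -> 't t
    -> [ `bool ] t

  val to_string : _ t -> string
end = struct
  (* The representation ignores the parameter; here a node is just a
     textual description of the computation. *)
  type 'a t = string

  let const_float f = Printf.sprintf "%g" f
  let const_int32 i = Int32.to_string i
  let sqrt x = "sqrt(" ^ x ^ ")"
  let greater_equal x y = "(" ^ x ^ " >= " ^ y ^ ")"
  let to_string x = x
end

(* Accepted: sqrt of a float node, comparison of two int32 nodes. *)
let ok = Node.(sqrt (const_float 4.))
let cmp = Node.(greater_equal (const_int32 1l) (const_int32 2l))

(* Both of the following are rejected by the type checker:
   Node.(sqrt (greater_equal (const_float 1.) (const_float 2.)))
   Node.(greater_equal (const_float 1.) (const_int32 2l)) *)
```

Because `'a Node.t` is abstract outside the module, the only way to obtain a node is through functions whose signatures constrain the phantom parameter, which is what lets the compiler catch the two rejected expressions.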

Specifying these function signatures manually would be tedious so this wrapping OCaml code ends up being generated automatically from the operation description file provided in the TensorFlow distribution.

The Node.t type does not encode the dimensions or even the shape of the embedded tensor. Including some shape information could probably be useful but would result in more complex function signatures.

Using a Convolutional Neural Network for Neural Style Transfer

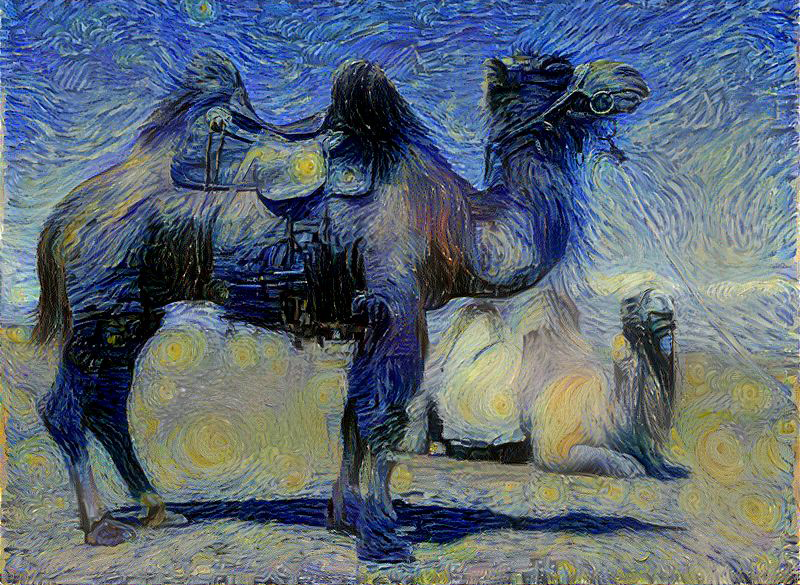

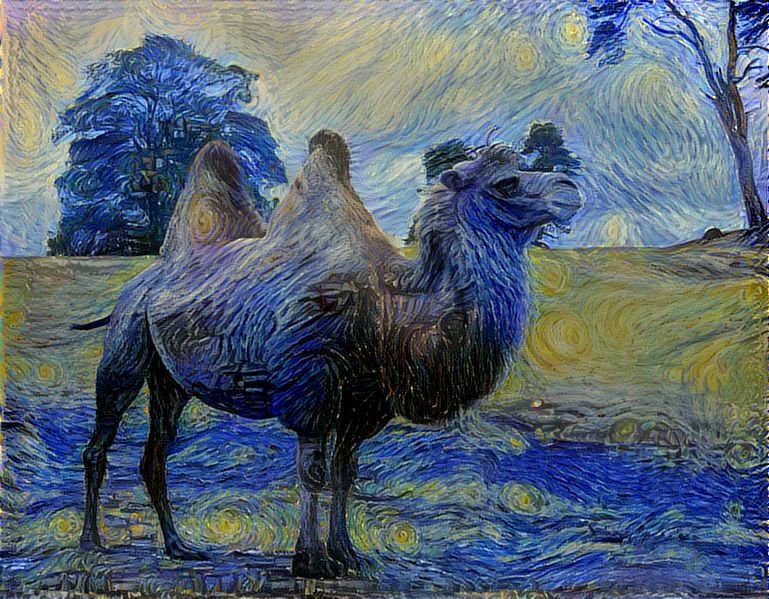

Neural Style Transfer was originally introduced by Gatys, Ecker, and Bethge in 2015 in A Neural Algorithm of Artistic Style. This paper describes how to combine a content image and a style image to generate a new image.

The idea is to use a CNN to extract some features from an image. Informally, a CNN stacks multiple layers between the input image and its output (which could for example be the class the input image belongs to). The layers that are close to the input image extract low-level features, e.g. a vertical line. The ones that are further away extract higher-level features, e.g. fur when it comes to recognizing an animal or a tire when it comes to recognizing a car.

As we want to have the same content as the content image, the final image should have similar high level features at the same positions. For style, the final image should have the same low level features as the style image, but position is not important here. We just care about having the same intensity on the different low level features.
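
In the Gatys et al. paper, the position-independent statistic used for style is the Gram matrix of a layer's feature maps, while content is compared position by position. The plain OCaml sketch below illustrates the difference on a tiny example. The `features` layout and the function names are assumptions for this illustration, and the normalization constants and per-layer weights from the paper are left out.

```ocaml
(* Feature maps of one layer: features.(channel).(position), with the
   two spatial dimensions flattened into a single position index. *)
type features = float array array

(* Content loss: squared differences, position by position. *)
let content_loss (a : features) (b : features) =
  let total = ref 0. in
  Array.iteri
    (fun ch row ->
      Array.iteri
        (fun pos v ->
          let d = v -. b.(ch).(pos) in
          total := !total +. (d *. d))
        row)
    a;
  !total

(* Gram matrix: g.(i).(j) is the sum over positions of the product of
   channels i and j. Summing over positions discards where a feature
   fired and keeps how strongly channels fire together. *)
let gram (f : features) : features =
  let n = Array.length f in
  Array.init n (fun i ->
      Array.init n (fun j ->
          let acc = ref 0. in
          Array.iteri (fun pos v -> acc := !acc +. (v *. f.(j).(pos))) f.(i);
          !acc))

(* Style loss: squared differences between the two Gram matrices. *)
let style_loss a b = content_loss (gram a) (gram b)

(* Two channels over three positions, and the same features with all
   positions rotated by one. *)
let image = [| [| 1.; 2.; 3. |]; [| 0.; 1.; 0. |] |]
let shifted = [| [| 3.; 1.; 2. |]; [| 0.; 0.; 1. |] |]
```

Here `content_loss image shifted` is non-zero because the features moved, whereas `style_loss image shifted` is zero because the Gram matrix does not depend on where the features occur.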

In order to experiment with this we used a pre-trained VGG-19 model. The actual implementation and details on how to run it can be found on GitHub.

As an example, we took as input a camel picture from Wikimedia and as style image Van Gogh’s famous The Starry Night. A generated image using Neural Style Transfer can be seen below.

Generating OCaml Code using a Recurrent Neural Network

Andrej Karpathy wrote a very nice blog post on how RNNs can be used for character-based language modeling. In this setting an RNN is trained on some very large corpus to predict the probability distribution of the next character. Once trained, the RNN is given some initial empty memory and is used to randomly generate a character based on this distribution. The generated character is then fed back as input to the RNN, along with the updated RNN memory, to generate the following character, and so on.
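
The sampling loop described here can be sketched in plain OCaml as follows. The `step` argument stands in for the trained RNN and is purely hypothetical, as are the other names. Seeding the loop with a `first_char` is also an assumption of this sketch, since how the first input character is chosen is not specified above.

```ocaml
(* Pick an index from a probability distribution given a uniform draw u
   between 0 and 1: walk the cumulative sum until it exceeds u. The last
   index is the fallback in case of rounding. Expects a non-empty array. *)
let sample_index probs u =
  let n = Array.length probs in
  let rec go i cumulative =
    let cumulative = cumulative +. probs.(i) in
    if u < cumulative || i = n - 1 then i else go (i + 1) cumulative
  in
  go 0 0.

(* step state c stands in for the trained RNN: it consumes a character
   and the current memory, and returns the next-character distribution
   over alphabet together with the updated memory. *)
let generate ~step ~alphabet ~init_state ~first_char ~length =
  let buf = Buffer.create length in
  let rec loop state c remaining =
    if remaining > 0 then begin
      let probs, state = step state c in
      let next = alphabet.[sample_index probs (Random.float 1.)] in
      Buffer.add_char buf next;
      (* Feed the sampled character and the new memory back in. *)
      loop state next (remaining - 1)
    end
  in
  loop init_state first_char length;
  Buffer.contents buf
```

Sampling from the distribution, rather than always picking the most likely character, is what makes each generated text different.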

More details are available in the original blog post and in its accompanying implementation. As a fun application, the author used a corpus made of all the works of Shakespeare and was able to generate samples that look very similar to actual Shakespeare.

Once again we wrote an OCaml-based implementation of the same idea, using two LSTMs stacked on top of each other. The actual implementation, along with instructions on how to run it, can be found on GitHub. As an example, we trained a network on the concatenation of the OCaml files from the Base GitHub repo, which resulted in a corpus of 27 thousand lines. We then used the trained network to generate some random text that looks like OCaml code.

    (** Generic date don't only then of the set operations *)
    let to_int x = Conv.int64_to_int32_exn x.count arg.init ts
    let init =
      match state with
      | `Ok x -> x
      | `Duplicate_key key ->
        Or_error.error_string "int64_negative_exn" l1 l2 l3 ~f:(fun x ->
          let new_acc, y = f !acc x in
          acc := new_acc;
          y)
    in
    !acc, result
    ;;
    let partition_tf t ~f =
      let t = create () in
      enqueue t a;
      t
    ;;

The resulting code is far from compiling but it gets a bunch of things right, like comments being properly opened and closed and containing text that looks like English.

Concluding Remarks

It was fun to play with OCaml and replicate some well known results in this setting. However it should be noted that TensorFlow in OCaml has some rough edges.

- The machine learning library ecosystem in OCaml is nowhere near as well developed as it is in Python.

- There are very few resources for learning how to do machine learning in OCaml, which is in stark contrast to Python.

- Shape errors are difficult to debug, since the OCaml bindings don’t provide proper line numbers for where the mismatch is coming from. Hopefully this could be fixed by switching the bindings to use the new TensorFlow eager mode.

That being said using OCaml has some nice properties too.

- Type safety helps you ensure that your training script is not going to fail after a couple of hours because of some simple type error.

- As noted by Christopher Olah in this blog post there are some natural typed representations of neural networks which work nicely with functional programming languages, especially for Recurrent Neural Networks.

The banner at the top of this page was generated from this Wikimedia image.