I recently ported the Hardcaml_step_testbench

library, one of the libraries that

we use at Jane Street for Hardcaml simulations, from using monads to using algebraic

effects, a new OCaml 5 feature. This blog post walks through what algebraic effects are,

why you should consider using them in lieu of monads, and how to actually work with them

using the Handled_effect library. One

thing I’ve come to believe is that most of what can be done with monads can be done with

algebraic effects in a much more elegant way.

Algebraic effects were originally added to OCaml for general-purpose concurrent execution of programs for OCaml 5, which supports thread-level parallelism. The fact that they can be repurposed for Hardcaml simulations speaks to how well-thought-out and general a language feature this is.

I am writing this post as someone who is not a type-theory expert. The fact that I can use algebraic effects without fully understanding the underlying mechanics is one nice feature of their design.

(The library is still named Oxcaml_effect on github. We are in the process of

renaming it to Handled_effect.)

What’s wrong with monads?

Monads have been used by OCaml programmers for a long

time to model computation. Jane Street’s own monadic Async

library, which is used for

concurrent programming, powers a lot of our infrastructure. Why would we want to replace

it?

Reason 1: Monads infect your code and make everything harder to read

Recall that monads have the following type signature:

(* This is part of the [Monad.S] module type *)

type 'a t

val return : 'a -> 'a t

val bind : 'a t -> f:('a -> 'b t) -> 'b t

val map : 'a t -> f:('a -> 'b) -> 'b t

Suppose we are interacting with a server that helps us accumulate numbers. Using the

Deferred.t monad from Async as an example, an interface might look something like this:

type txn

val start_transaction : unit -> txn Deferred.t

val send_number : txn -> int -> unit Deferred.t

val wait_for_completion : txn -> int Deferred.t

You could use these in a bit of code like so:

let send_numbers_to_server n =

let%bind txn = start_transaction () in

let%bind () =

Deferred.for_ 0 ~to_:(n - 1) ~do_:(fun i ->

let%bind () = send_number txn i in

printf "Sent number %d\n" i;

return ())

in

let%bind result = wait_for_completion txn in

printf "Transaction done: %d!" result;

return ()

;;

For those not familiar, let%bind x = foo () in bar () is some syntactic sugar that is

transformed into bind (foo ()) ~f:(fun x -> bar ()).

The Async monad is all over this code. let%bind () = surrounds operations that perform

an asynchronous operation; you need the noisy return () in a bunch of places to ensure

you finish on a Deferred.t type; and you have to use special versions of core library

functions like Deferred.List.iter—or here, Deferred.for_—instead of the normal versions,

because you must return a Deferred.t. Once you start using a monad, you’re effectively

trapped in it. All code that interacts with your usage must also consider the monad. It’s

annoying.

If we had a hypothetical Async library built with OxCaml algebraic effects instead, it might look something like this:

let send_numbers_to_server (h : Deferred.Handler.t) n =

let txn = start_transaction h in

for 0 to n - 1 do

send_request h txn i;

printf "Sent number %d\n" i;

done;

let result = wait_for_completion h txn in

printf "Transaction done: %d!" result;

;;

No special let% ppx; no returns; no monad-flavored standard library functions; and the

overall function returns a normal value that callers don’t have to treat specially.

Reason 2: You can’t use unboxed types and the local mode

Monads make it tricky to use some valuable OxCaml features, notably unboxed types and the local mode.

Consider the following piece of code:

open Core

open Unboxed

(* Assume [Bar] is some module that implements Monad.S and some utility functions *)

module Bar : sig

include Monad.S

val do_stuff : unit -> unit t

end

type foo =

{ a : int

; b : int

}

let do_thing () =

let foo @ local = { a = 1; b = 2 } in

let%map x = Bar.do_stuff () in

F64.of_int (foo.a + foo.b)

;;

The above code will not compile! To understand why, let’s consider what the code looks like when we run it through the PPX preprocessor:

let do_thing () =

let foo @ local = { a = 1; b = 2 } in

Bar.map (Bar.do_stuff ()) ~f:(fun () ->

F64.of_int (foo.a + foo.b)

)

;;

OxCaml introduces the notion of

layouts to types and

modes to values. There are many kinds of

layouts corresponding to the memory representation of the type, which is out of scope

here. In this case, we’re using a layout that’s backwards compatible with OCaml’s default

memory representation, namely the value layout. Modes on the other hand track the

properties of values. Here, the mode simply tracks whether a value is allocated in a

caller’s stack (it only lives in the caller’s region), or on the global heap (it is

managed by the garbage collector).

Once you add in the layout and mode annotations, our Bar.map has the following

signature:

val map

: ('a : value) ('b : value) .

'a t @@ global -> f:('a -> 'b) @@ global -> 'b @@ global

The two problems here are:

- The

foorecord is locally allocated. This means thatfcannot capture it in its closure environment, sincefhas to be globally allocated. - The return value of the closure,

f, has the layoutvalue.F64.t, which is the return type ofF64.of_int, unfortunately has the typefloat#, which is not compatible with the layout value.

The Tools & Compilers group at Jane Street have some work in

ppx_let that tries to get around this somewhat by

allowing f to be a locally allocated closure. This is a good enough solution for a lot

of cases, but not where you simply cannot have a locally allocated closure. One such

example is Async, which requires the closure to be

globally allocated, as the closure itself is passed to the Async scheduler as tasks to be

scheduled. Furthermore, it only actually solves the first of the two problems above.

Effects are a neat solution to this. They circumvent the need for globally allocated closures, as they shift the task of managing the “environment” of a particular context into the language runtime. This conveniently allows us to use various new fancy OxCaml features.

Effects have other upsides, like better stack traces and more seamless composability. (If you’ve ever tried to compose two monads together you will know how painful it can be.)

A layman’s introduction to Algebraic Effects

Effects can be thought of as primitives built into the language that allow you to suspend

execution in the control flow of a piece of computation and yield control to a scheduler /

runtime. When you “perform” an effect (more on that later), execution pauses and the

handler receives a continuation k—a first-class value representing “everything that

was going to happen after the perform.” From there you can continue with the underlying

value (the way you might after a let%bind in the Async monad), throw, or hold onto the

continuation for later.

We will walk through some simple examples, before going through more complicated examples for Hardcaml simulations.

If you are vaguely familiar with OCaml effects in the OCaml manual, you will notice that things look quite a bit different. The type-safe OxCaml effects API differs from the stock OCaml effects API.

Let’s start with a simple example to understand the API provided by Handled_effect.

Let’s say we want to build a library that allows us to perform a trivial computation that either increments or decrements a value. We want to end up with code like this:

let computation (handler : E.Handler.t @ local) =

let x = 1 in

let y = E.perform handler (Plus_one x) in (* suspends here *)

let z = E.perform handler (Minus_one y) in (* suspends here *)

print_s [%message (x : int) (y : int) (z : int)]

;;

let%expect_test "" =

run_computation computation;

[%expect {| ((x 1) (y 2) (z 1)) |}]

;;

This is the core “business logic”: it doesn’t know how Plus_one or Minus_one are

implemented. We’re just setting up the flow of the computation. In monadic Async terms,

the code above is like the code the user writes that’s peppered with let%binds. The

actual performing of the computation (which we’ll get to below) is like what the Async

scheduler does behind the scenes with that user code.

The E.Handler.t value, called an “effect handler”, can be viewed as an object that you

pass around to access the implementation for the effect E. (Notice that we have

annotated the handler argument with @ local. For now, just take my word for it that it

needs the local annotation for type-safety purposes. I will elaborate on why at the end of

the blog post.)

Let’s break down the other parts. You start by defining the possible effect operations. This is a GADT that specifies the possible operations that your computation can perform that will suspend execution. It can look something like this:

open Core

module Effect_ops = struct

(* One of the type arguments must specify the return

type of performing the effect; here, an int *)

type 'a t =

| Plus_one : int -> int t

(** An operation that when given [x], return [x + 1] *)

| Subtract_one : int -> int t

(** An operation that when given [x], return [x - 1] *)

end

(* We invoke the Handled_effect `Make` functor on our

module to Effect-ify it. *)

module E = Handled_effect.Make (Effect_ops)

The module E is the primary module users will interact with when working with effects.

There are many functions and types in there, which we will walk through in the example.

But below is a heavily simplified interface of the E module that we just constructed:

module E : sig

module Handler : sig

type t

end

(** Performs an operation in the context of running a computation. *)

val perform : Handler.t @ local -> 'a Effect_ops.t -> 'a @ once unique

(** Evaluates a computation *)

val run : (Handler.t @ local -> 'a) -> 'a Result.t

module Result : sig

type ('a, 'e, 'es) t =

| Value : 'a -> ('a, 'e, 'es) t

(** This is returned when the computation is finished. *)

| Exception : exn -> ('a, 'e, 'es) t

(** The computation raises an unhandled exception. We'll

ignore exceptions for the purposes of this blog post. *)

| Operation :

('o, 'e) op * ('o, 'a, 'e, 'es) continuation

-> ('a, 'e, 'es) t

(** This is returned when the computation calls

[E.perform operation]. The first argument is the operation

in question, and the second argument is a continuation object

that can be used to resume execution of the computation with

the result.

*)

end

end

So when you use Effects you’re really thinking about two pieces:

- The computation—this is your business logic. Computations have a type signature

E.Handler.t @ local -> 'a, where'ais the return value of the overall computation. - The operation handlers—this is the code that interprets what the operations mean.

When the computation calls E.perform, the execution jumps to the operations

handler. From the operations handler, you resume execution of the computation using the

continuation by calling Handled_effect.continue k.

Writing the computation is usually straightforward and not much different from writing regular code without Effects. Most of the complexity lies in writing the operation handlers. Here’s what it looks like in this example:

let rec handle_computation_result (result : (_, _) E.Result.t) =

match result with

| Value result ->

(* The computation has reached the end and returned the result *)

result

| Operation (op, k) ->

(* If we're here, the effect has suspended. The [op] type is the set of

operations the computation can perform as expressed by our [Effect_ops]

type. [k] is a continuation that the user can use to resume the

computation execution with [Handled_effect.continue]

*)

(match op with

| Plus_one x ->

handle_computation_result (Handled_effect.continue k (x + 1) [])

| Subtract_one x ->

handle_computation_result (Handled_effect.continue k (x - 1) []))

| Exception exn ->

(* In real examples, we would do smarter things with exceptions, but to keep

these examples easy to follow, we simply reraise them.

*)

raise exn

;;

let run_computation (type a) (computation : E.Handler.t @ local -> a) : a =

handle_computation_result (E.run computation)

;;

Algebraic Effects for Hardcaml simulations

The Hardcaml_step_testbench

library is a library we use for

FPGA simulations in Hardcaml. We have used this library for years to simulate FPGA

circuits. Recently, we added support for an effect-based API. It’s a nice demonstration

of what effects can get us in a domain that they’re not completely designed for.

We will walk through some example code that emulates the core behaviour of this library to showcase the effectful API. The actual library is much more featureful, but the core idea can be illustrated with a toy implementation.

A digital circuit can be abstractly thought of as a stateful component that consumes inputs and produces outputs atomically at every time step. Hardware designers call this time step a clock cycle, as physically it corresponds to a clock signal that is supplied to the circuit. An important distinction from software programs is that inputs and outputs are consumed at every clock cycle, whether or not the circuit is performing any higher-level application functionality.

(To the digital design engineers—I’m restricting this to synchronous single clock-domain circuits simulations. There are tricks we do to deal with multiple clock domains, but it’s not important for what we’re talking about here.)

Compiling these digital circuits into FPGAs can take on the order of hours (or closer to months for ASICs). We like getting faster feedback on the changes we make to our testbenches and hardware, so we write simulations for these circuits.

In running digital circuit simulations, we are trying to synchronize two interacting components:

- The circuit itself

- Testbench threads that synchronize and interact with the circuit

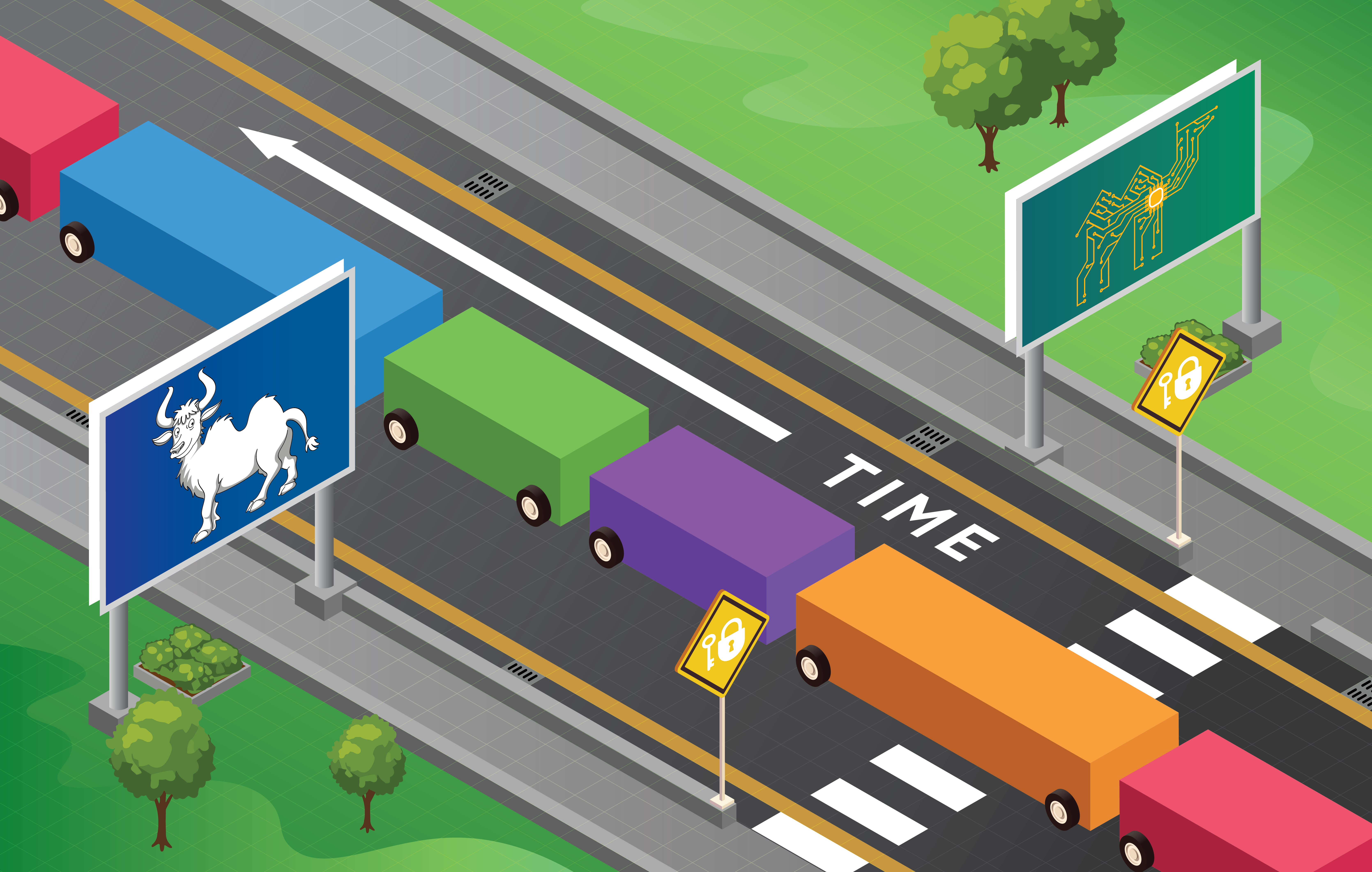

The execution flow of the testbench and simulated circuit looks something like this:

In practice, we oftentimes have multiple threads of execution in our circuit testbenches. This is useful, as digital circuits can sometimes have complicated and (mostly) disjoint parts that different testbench threads might want to interact with individually.

For the purpose of this blog post, we are going to make some simplifications:

- When the user runs the computation, all the concurrent tasks must be known at that point

- We require all computations to have no return values (i.e., they return

unit)

So what makes this tricky in a pre-effects world? Imagine we want to interleave the

following two computations, with cycle () representing the synchronization points:

[ (fun () ->

for i = 0 to 2 do

cycle (); (* Synchronization point *)

printf "foo %d\n" i;

done)

; (fun () ->

for i = 0 to 2 do

cycle (); (* Synchronization point *)

printf "bar %d\n" i;

done);

]

In the pre-Effects world, the only way we can do this is by using closures. We need

closures because we don’t have a practical way of representing the part of the execution

that comes after the call to cycle.

The most ergonomic way to do this happens to be with monads. You could imagine having a computation monad that looks something like this:

type 'a t =

| Return : 'a -> 'a t

| Bind : ('a t * ('a -> 'b t)) -> 'b t

| Cycle : unit t

val cycle : unit -> unit t

val run_computations : (unit -> unit t) list -> unit

The run_computations bit of code is quite involved so I won’t include it here. (The fact

that it’s so complex is one motivation for using Effects.) The rough intuition is:

- Start at the head of the computation. It will walk it up to a

Bind(Cycle, f)point. It will then set aside and store thefclosure. If the computation evaluation ends up atReturn (), just mark it as done (there is no closure to store in this case). - Move on to the next computation, and do the same thing, until you get to the end of the list.

- Repeat the process until all the computation has been done.

Putting that all together, and the monadic version of our testbench runner may look something like this:

run_computations

[ (fun () ->

for_ 0 ~to_:2 ~do_:(fun i ->

let%bind () = Step.step () in

printf "foo %d\n" i;

Step.return ()))

; (fun () ->

for_ 0 ~to_:2 ~do_:(fun i ->

let%bind () = Step.step () in

printf "bar %d\n" i;

Step.return ()))

]

[%expect

{|

foo 0

bar 0

foo 1

bar 1

foo 2

bar 2

|}]

This of course has all the problems of monads that we talked about earlier. But in a pre-Effects world there isn’t another way to support the notion of a synchronization point.

With OxCaml Effects, we do have a first-class way of representing the “computation to come”. We can have an API that looks something like the following:

module Handler : sig

type t

end

val run_computations : (Handler.t @ local -> unit) list -> unit

val step : Handler.t @ local -> unit

The same code above can be written in a much cleaner style:

let%expect_test "" =

run_computations

[ (fun h ->

for i = 0 to 2 do

step h;

printf "foo %d\n" i

done)

; (fun h ->

for i = 0 to 2 do

step h;

printf "bar %d\n" i

done)

];

[%expect

{|

foo 0

bar 0

foo 1

bar 1

foo 2

bar 2

|}]

;;

Note the interesting bit in this API: whenever a computation calls step, it yields

control to the “step runtime”, as a synchronization point, until all the threads reach

their respective synchronization points. This is a very powerful feature that allows each

of the testbench computations to interact independently with the circuit in the same

state, without having to coordinate their work explicitly. At the synchronization point,

the underlying circuit simulator will advance the circuit by one step, before resuming the

execution of the computations. As high-level programmers interacting with the

Handled_effect library, we don’t need to understand how this all works under the hood.

So, how does one actually go about implementing the above code with effects?

Similar to before, we first define the effect operations. In this case, we need to perform

an operation to yield control to the runtime, which we do with a Step operation. We

also define a step function that the users will interact with.

module Effect_ops = struct

type 'a t = Step : unit t

end

module E = Effect.Make (Effect_ops)

module Handler = E.Handler

(* val step : Handler.t @ local -> unit *)

let step (h : Handler.t @ local) = E.perform h Step

Then, we define some kind of state tracker for the execution of a computation. We encapsulate

this in a Thread_state.t type. The trick here is that when the computation performs

an effect, we don’t actually need to call the continuation immediately. We can have several

concurrent computations in flight, and several continuations.

module Thread_state = struct

type t =

| Unstarted of (Handler.t @ local -> unit)

| Running of (unit, unit, unit) E.Continuation.t Unique.Once.t

(** When the computation state is [Running], it means the computation called

[step] and has suspended its execution. It will synchronize with all other

computations at their respective calls to [step] before advancing.

The continuation object has the unique mode, which means the compiler verifies

that it can only be used exactly once. [Unique.Once.t] is used here

to cross the continuation into the aliased mode (it can be used multiple

times, which is the default in OCaml) to defer this check into runtime

rather than compile time. The exact details of how it works are not too

important for the purposes of this blog post.

*)

| Finished

let handle_result (result : (unit, unit) E.Result.t @ once unique) =

match result with

| Value () ->

Finished

| Exception e ->

Exn.reraise e "Step raised exn"

| Operation (op, k) ->

(match op with

| Step -> Running (Unique.Once.make k))

;;

(* Advance the computation until it calls [step] *)

let run_until_step t =

match t with

| Unstarted computation ->

handle_result (E.run computation)

| Running k_uniq ->

handle_result (Handled_effect.continue (Unique.Once.get_exn k_uniq) () [])

| Finished -> Finished

;;

end

With all these pieces together, we can implement the run_computations function.

let run_computations (computations : (E.Handler.t @ local -> unit) list) =

let states =

Array.map (Array.of_list computations) ~f:(fun computation ->

Thread_state.Unstarted computation)

in

while

Array.exists states ~f:(function

| Finished -> false

| Unstarted | Running _ -> true)

do

Array.map_inplace states ~f:Thread_state.run_until_step

(* In practice, we will advance time on the underlying circuit being simulated. *)

done

;;

This is a simplification of what happens in practice, but not by much! The only primitive

we are missing here is spawn, which allows a computation to spawn further

computations. This is something we can also achieve with Algebraic Effects.

OxCaml’s type safety guarantees, or, Why does a handler have a local mode?

The paper on Locality and Effect Reflection goes through the formal argument for why our system uses a local mode for typesafe effects. Here I will attempt to give a brief intuitive explanation.

Recall that we define a computation with an effect E as E.Handler.t @ local -> 'a.

When we call E.run on such a computation, it evaluates the function in an environment that can

intercept calls to E.perform to yield control to the effect operations handler. In

other words, we can’t arbitrarily call E.perform outside the context of having

registered effect handlers for E.

Suppose we could run computations of type E.Handler.t -> 'a, without the local

annotation on the handler. One can see how you could express ill-defined logic in this case:

let global_handler = E.run (fun h -> h) in

E.perform global_handler operation

Inside E.run, we are in the context with an appropriate effects operation handler.

But what happens when we call E.perform global_handler operation? E is no longer handled!

The @ local stops us from doing this. The above code snippet will fail with a compile

error due to a mode error.

(* File "fail_compilation_example.ml", line 82, characters 39-40: This value is local but is

expected to be global.

|

|

| *)

let global_handler = E.run (fun h -> h) in

E.perform global_handler operation

Wrapping up

I hope this post helps convince you that effects > monads in many cases, and that it’s

worth giving the Handled_effect library a

look.