This post is aimed at readers who are already familiar with stochastic gradient descent (SGD) and terms like “batch size”. For an introduction to these ideas, I recommend Goodfellow et al.’s Deep Learning, in particular the introduction and, for more about SGD, Chapter 8. The relevance of SGD is that it has made it feasible to work with much more complex models than was formerly possible.

There is a lot of talk about batch size in stochastic gradient descent (SGD). I will argue in this post that batch size mostly doesn’t matter (as long as it’s not too large), but it can seem to matter when you express SGD in terms of gradients averaged over batches rather than summed. I personally think that expressing SGD in terms of gradients averaged over batches is an unfortunate historical accident that leads to unnecessary confusion about the role of batch size.

A recent preprint by Samuel Smith and Quoc Le (2017) lends some empirical support to the notion that batch size doesn’t matter (though the authors frame it very differently!). They also propose rules for how batch size is related to learning rate, training set size, and momentum, though essentially the same rules already appear in Mandt et al. (2017). Smith and Le treat SGD as a stochastic differential equation (SDE), as do Mandt et al., who also cite Kushner and Yin (2003) and Ljung et al. (2012) as earlier examples. The empirical evidence that Smith and Le’s work gives for the rules proposed by Mandt et al. is valuable.

Anyway, on to the batch size bashing.

Two ways to write vanilla SGD

Consider vanilla SGD with batch size B. If we are estimating θ, each step can be written as

where gavg is gradient averaged over the batch and ε is the learning rate. One could alternatively write

where gsum is gradient summed over the batch; the term “learning rate” is already taken, so let’s call τ temperature (for reasons which will soon become evident). The above two ways of writing SGD are equivalent when ε/B = τ.

I claim that τ matters and batch size mostly doesn’t (provided it isn’t too large). If you fix a particular ε, however, and then vary B, then B will seem to matter a lot—but this will be mostly because by fixing ε you have tied τ and B together, and temperature τ does matter.

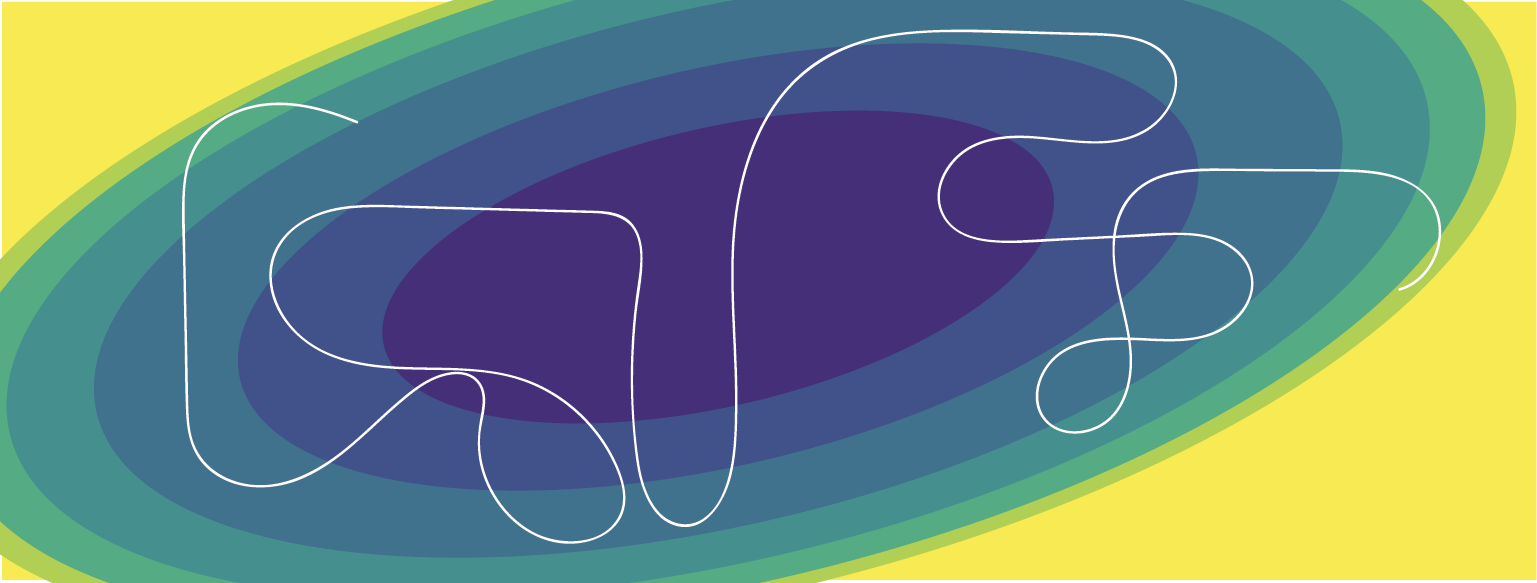

Consider Figure 5a from Smith and Le, where test accuracy is plotted as a function of B for several different values of ε, which by eyeballing I have crudely reproduced here as the graph on the left. Because ε has a fixed value on each curve, the value of B appears to matter. If you take that data and present it as a function of τ for several values of B, you get the graph on the right:

I have conveniently dropped the points where ε = 10—those were points where B is too large, and batch size definitely does matter (in a bad way) when it is too large.

This suggests that test performance is a function of temperature and that B doesn’t matter (again, provided it isn’t too large). This is a stronger statement than claiming the optimal B is linear in ε. If the peaks in the left graph had different heights, we would still have the same scaling rule without test performance being a function of just temperature. The fact that the peaks have the same height is telling us something beyond the scaling rule.

In high-level terms: If you are numerically solving an SDE, then once you get below a certain step size, the noise dominates the discretization error, so further reductions in step size don’t have much effect on the distribution you get; here, B is playing the role of step size, so this is saying B doesn’t matter much once it’s small enough that you’re doing a decent job of solving this SDE. On the other hand, if you insert a factor of 1/B into this SDE (which is what you’re doing when you use gradient averaged over a batch rather than summed) then changing B is changing the SDE which will have a big effect on the distribution you get. But it’s not because of step size, it’s the factor of 1/B in the SDE that really explains the difference.

Likewise, since τ matters and B doesn’t, one should expect there to be a single optimal τ without regard to B. Since τ = ε/B, the proportionality rule Bopt ∝ ε illustrated in Figure 5b of Smith and Le is a consequence of this.

There could very well be interesting 2nd-order effects in the choice of batch size, but they are obscured by this 1st-order effect you get when you fix an ε and turn B into a proxy for τ. More importantly, there could be separate reasons to want a particular batch size, perhaps for performance, or for tuning batch normalization. By thinking in terms of τ rather than ε, you are more free to choose B according to these other criteria.

SGD as a sampler rather than optimizer

Now consider how these parameters should vary depending on training set size N. Smith and Le, and earlier work such as Mandt et al. (2017), propose the rule B ∝ εN. This can be equivalently stated as τ ∝ 1/N, and I think this latter formulation makes more clear that batch size isn’t a major consideration.

I prefer to arrive at τ ∝ 1/N more via Mandt et al.’s approach, which views SGD as a sampler rather than an optimizer. To motivate this point of view, suppose you could choose between the following (the silly numbering will be justified later):

- You observe N data points, and use the max-likelihood θ (or perhaps MAP θ) to make predictions.

- You observe N data points, and draw a single θ from your posterior, which you use to make predictions.

If you are in a context where asymptotic normality of MLE applies, you should favor (0). But if your posterior is some hairy high-dimensional thing, crisscrossed with deep but narrow ravines, (0) is awful1 and (1) is decent to very good. (Ideally you’d integrate over your posterior, and (1) is just an approximation of this that integrates for 1 point. That such a 1-point integral can do a good job might be counterintuitive, and I might say more about this in a future post.)

So, I think it is better to treat the aim of SGD as being an approximation of (1) rather than an approximation of (0). This is the context of Mandt et al., though I frame it here a little differently than they do—in particular, they write things in terms of ε rather than τ, which unnecessarily pulls batch size into the picture. I think the perspective of treating SGD as sampling from a distribution is a more compelling way to understand SGD’s generalization properties than to think of it in terms of preferring certain kinds of minima over other kinds of minima. (Among other things, if you’re not decreasing learning rate to 0, you’re not finding any minima.)

For a given choice of temperature τ, the SGD process will, in the long run, approximately follow some Boltzmann distribution exp(-E(θ)/τ). What we would like is for this to resemble our posterior, which looks like exp(-L(θ)/(1/N)) where L(θ) is negative log-likelihood, averaged over the dataset.2 This suggests the scaling rule τ ∝ 1/N. (I don’t think there’s anything too deep here: if you double the number of data points, the influence of any one point should get cut in half.)

Substituting τ = ε/B, this becomes the rule ε ∝ B/N which Mandt et al. propose on page 9. Smith and Le write this in the equivalent form B ∝ εN.

Back to the choice presented above: what if you were in a case where (0) is better, or you wanted something in between? Observe that (0) and (1) are special cases of the same more general rule; if your posterior is exp(-ℓ(θ)), then they are both sampling from exp(-ℓ(θ)/T): (0) is the limiting case as T→0, and (1) is the case T=1. So maybe there’s some fudge factor that should be included in temperature to get the right balance between (0) and (1)—i.e., you’d like to integrate over your posterior, but once you’re told your integral only gets to have a single point, you might want to “sharpen” your posterior before the point is drawn. But this T is absorbed as a multiplicative factor in τ, so if you are tuning τ based on validation performance, you get tuning of T for free.

Burn-in

We have glossed over the issue of burn-in by talking in terms of what temperature you should want in order to get good long-term behavior out of SGD. For very large data sets, if you use this temperature throughout training, you are not going to be running long enough for your process to resemble a stationary process.

In particular, while we expect the optimal long-term temperature to obey the scaling rule τ ∝ 1/N, the optimal initial temperature probably should not vary much with training set size N. (This is consistent with the general consensus that you should start with a high learning rate that is decreased during training.) Temperature is how much you change your mind about θ when shown another data point. With twice as much data, in the long run you should be changing your mind half as much on each point—that’s the τ ∝ 1/N rule. But in terms of getting to a reasonable belief early in training, that first point should change your mind the same amount regardless of how many more points you’re going to get to see. A different way to put this: You probably want to crank up the temperature at first for the sake of mixing even if that higher temperature would yield the wrong long-term behavior if you maintained it.3

Note that lower temperatures are going to tolerate larger batch sizes (in terms of SDEs, the slower your state is changing, the larger the time steps you can get away with while still doing a reasonable job of solving the SDE), so a large batch size that might be acceptable at the low temperatures occurring late in training might be too large during early training at a higher temperature, and you might want to use smaller batch sizes during early high-temperature training.

Momentum

The same “batch size doesn’t really matter” ideas apply if you use SGD with momentum, provided the momentum decay is stated in terms of “decay per point” rather than “decay per batch”, and velocity is also expressed in per-point rather than per-batch terms. For algorithms like RMSProp and Adam, there is the further complication that gradients are divided by some running estimate of standard deviation of gradient; here, I’m guessing you can still get (approximate) indifference to batch size, but you must state the algorithms in terms of estimating what the standard deviations of the per-point gradients are. (That doesn’t require any changes to the libraries you’re using—you just work with one parametrization in your own code and translate it to the convention of the code you’re calling.)

There’s also the question of how to make temperature maintain roughly the same meaning across different values for the momentum damping parameter μ (think of 1−μ as decay per point). One must write the velocity updates as vnew =(1−μ)B vold − τμgsum. (The factor of μ when gradient is added to velocity corrects for the fact that, once present, it will affect θ for many steps.) One should still expect τ ∝ 1/N. Relating to ε, B, and m (momentum decay per batch) one has m = (1−μ)B and τ = ε/((1−m)B) which yields the rule B ∝ εN/(1−m) that essentially appears in Smith and Le and Mandt et al. (once notation is accounted for). I don’t mean to suggest that this makes momentum somehow inconsequential; in particular, momentum can affect how quickly you mix.

References

Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep Learning. MIT Press, 2016. Available online.

Harold J. Kushner and George Yin. Stochastic approximation and recursive algorithms and applications, volume 35. Springer Science & Business Media, 2003.

Lennart Ljung, Georg Ch. Pflug, and Harro Walk. Stochastic approximation and optimization of random systems, volume 17. Birkhäuser, 2012.

Stephan Mandt, Matthew D. Hoffman, and David M. Blei. Stochastic gradient descent as approximate Bayesian inference. arXiv preprint arXiv:1704.04289, 2017.

Samuel L. Smith and Quoc V. Le. A Bayesian perspective on generalization and stochastic gradient descent. arXiv preprint arXiv:1710.06451, 2017.

-

The awfulness of (0) in the hairy high-dimensional case is often seen in practice, but does it exist in principle? If you know you’re going to be using a MAP estimate rather than integrating over your posterior, this can influence the design of the thing you call your prior in order to make (0) work better. For more information, consult an MDL adherent.

-

A separate question is how to make E(θ) resemble L(θ), and this is much of what Mandt et al. is about.

-

Even with this higher initial temperature, you still might not get good mixing, since the “good” parts of your posterior can be difficult to find regardless of algorithm. (Write your favorite NP-hard problem in terms of a Boltzmann distribution…) This is more speculative, but it seems that the temperature that would be optimal if you did reach the stationary distribution will still have good generalization properties even if you never manage to find your way out of some suboptimal region of the model space.